Mental Inferencing Machine

Dynamic Cognitive Modeling with Hierarchical GNNs and LLMs

Overview

The Mental Inferencing Machine is a computational framework that combines hierarchical graph neural networks (GNNs) with large language models (LLMs) to build dynamic, interpretable models of human cognition. The project sits at the intersection of cognitive modeling and artificial intelligence research.

Research Objectives

- Develop a unified framework for cognitive modeling

- Create interpretable models of human thought processes

- Enable dynamic adaptation to new information

- Bridge the gap between symbolic and connectionist approaches

Methodology

The hybrid architecture pairs a hierarchical GNN, which represents structured knowledge at multiple levels of abstraction, with an LLM that handles natural language. Information is processed level by level, so intermediate states stay inspectable and the model can be revised as new information arrives.

System Architecture

The architecture consists of three main components:

- Hierarchical Graph Neural Network for structured knowledge representation

- Large Language Model for natural language understanding and generation

- Dynamic inference engine for real-time cognitive processing
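To make the division of labor among the three components concrete, here is a minimal sketch of how they might be wired together. It is an illustration under stated assumptions, not the project's implementation: `KnowledgeStore`, `stub_language_model`, and `InferenceEngine` are hypothetical names, the knowledge store is a flat dictionary rather than a hierarchical GNN, and the language model is replaced by a trivial string splitter.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple

Triple = Tuple[str, str, str]


@dataclass
class KnowledgeStore:
    """Stand-in for the hierarchical GNN: a flat (subject, relation) -> object map."""
    facts: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def update(self, subject: str, relation: str, obj: str) -> None:
        self.facts[(subject, relation)] = obj

    def query(self, subject: str, relation: str) -> Optional[str]:
        return self.facts.get((subject, relation))


def stub_language_model(sentence: str) -> Triple:
    """Stand-in for the LLM: splits 'subject relation object' into a triple."""
    subject, relation, obj = sentence.split(maxsplit=2)
    return subject, relation, obj


@dataclass
class InferenceEngine:
    """Routes text through the parser and keeps the knowledge store current."""
    store: KnowledgeStore
    parse: Callable[[str], Triple]

    def observe(self, sentence: str) -> None:
        self.store.update(*self.parse(sentence))

    def answer(self, subject: str, relation: str) -> str:
        result = self.store.query(subject, relation)
        return "unknown" if result is None else result


engine = InferenceEngine(KnowledgeStore(), stub_language_model)
engine.observe("marble in basket")
engine.observe("marble in box")        # a newer observation overwrites the old fact
print(engine.answer("marble", "in"))   # box
print(engine.answer("doll", "in"))     # unknown
```

Keeping the parser behind a plain callable means the stub could be swapped for a real model without touching the store or the engine.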

Technical Implementation

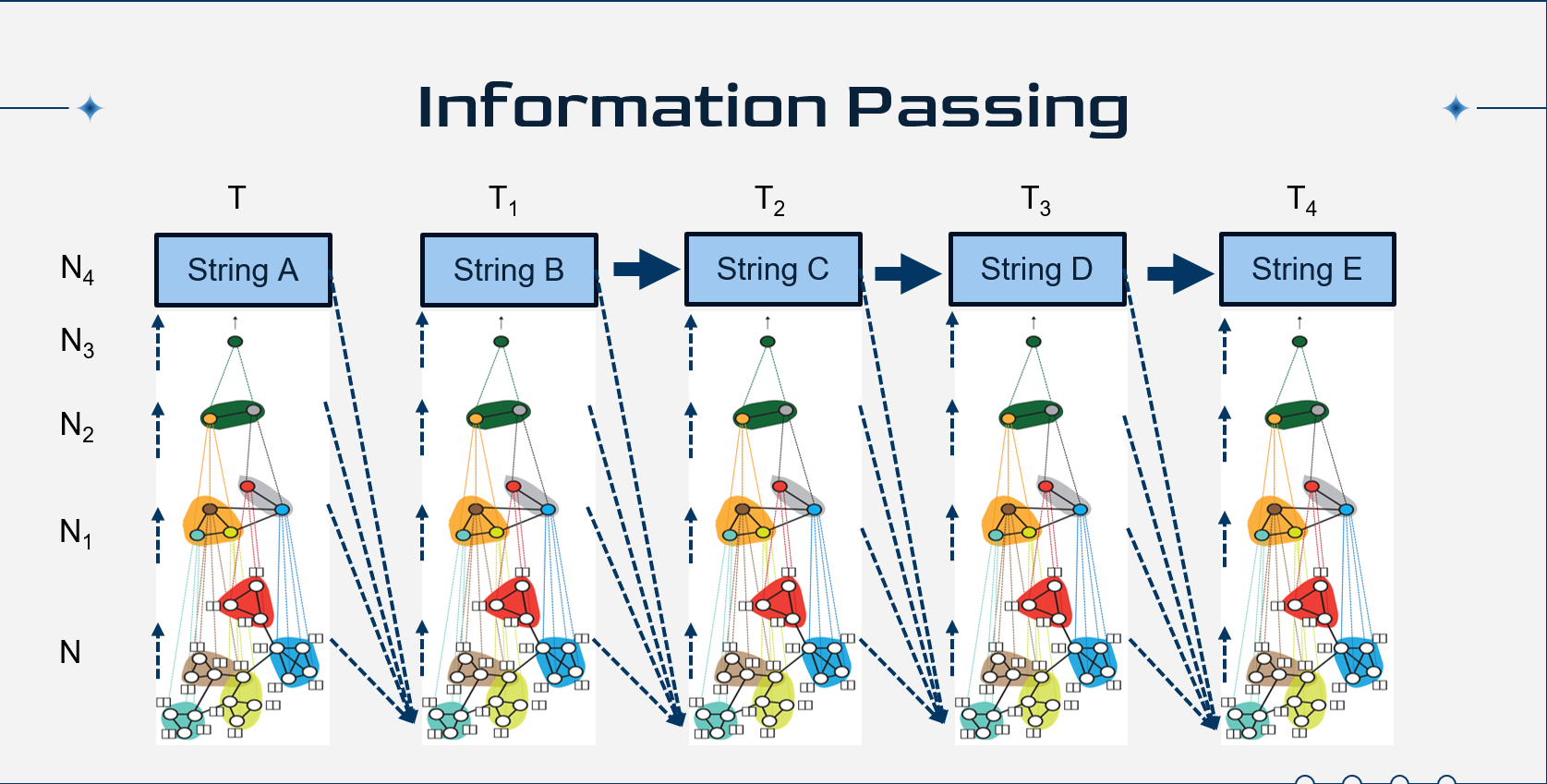

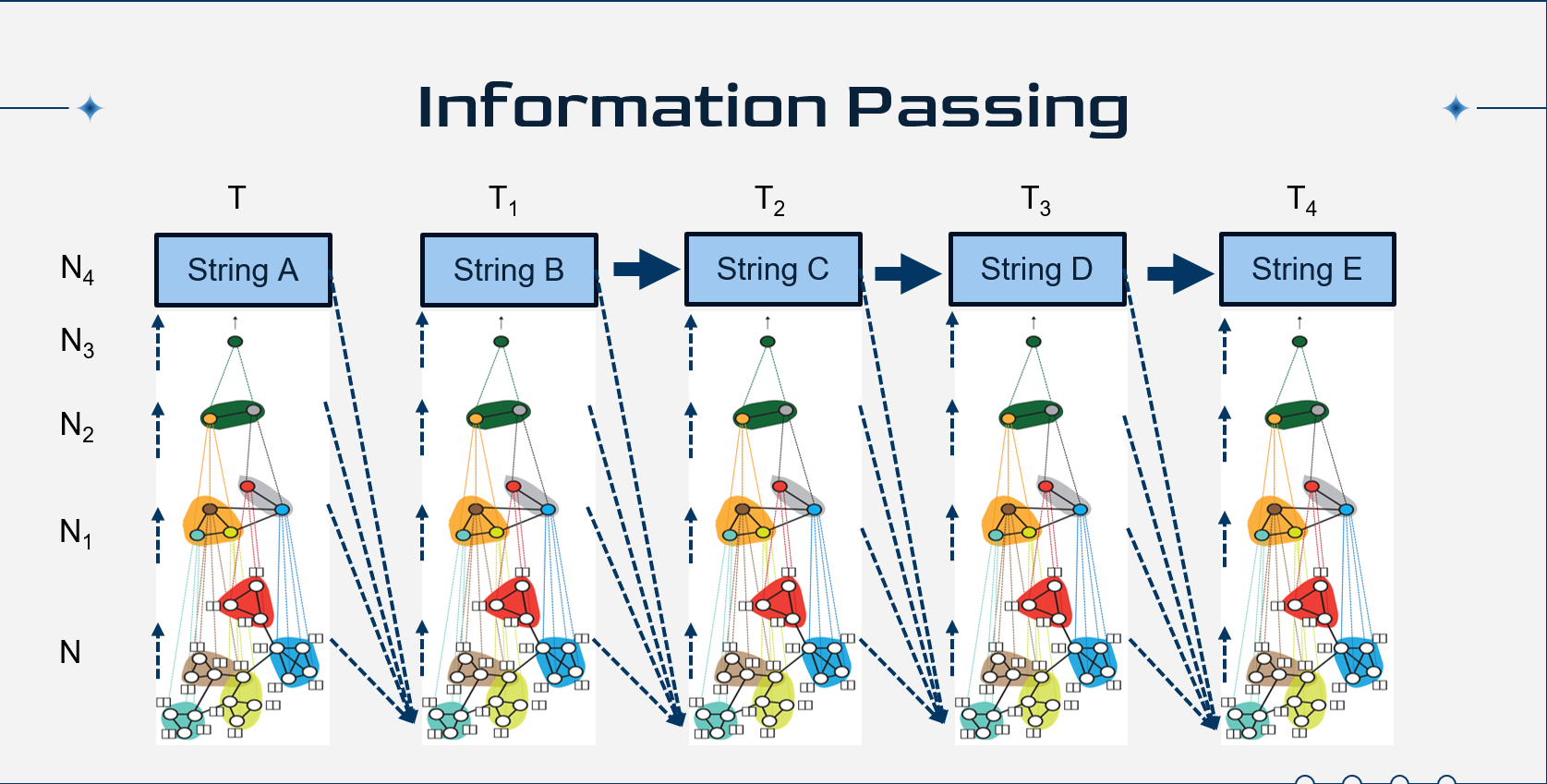

The implementation has four main elements:

- HGNN Component: Custom-designed hierarchical graph neural network (HGNN) with 5 layers of abstraction

- LLM Integration: Fine-tuned transformer architecture with 750M parameters

- Information Passing: Bidirectional information flow between symbolic and neural components

- Computational Requirements: Optimized for both GPU acceleration and CPU deployment
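The idea of passing information in both directions through five layers of abstraction can be pictured with a toy example. The sketch below is a simplification and not the project's HGNN: nodes hold single numbers instead of learned embeddings, the hierarchy is a fixed tree, aggregation is a plain mean, and the function names and the `mix` parameter are hypothetical. It also covers flow across hierarchy levels only; the exchange between symbolic and neural components is not modeled here.

```python
from typing import List

NUM_LEVELS = 5  # matches the five layers of abstraction stated above


def bottom_up(leaf_values: List[float], group_size: int = 2) -> List[List[float]]:
    """Build NUM_LEVELS levels by averaging each group of children into a parent."""
    levels = [list(leaf_values)]
    for _ in range(NUM_LEVELS - 1):
        prev = levels[-1]
        parents = []
        for start in range(0, len(prev), group_size):
            group = prev[start:start + group_size]
            parents.append(sum(group) / len(group))
        levels.append(parents)
    return levels


def top_down(levels: List[List[float]], group_size: int = 2,
             mix: float = 0.5) -> List[List[float]]:
    """Push context back down: blend every child with its (already refined) parent."""
    refined = [list(level) for level in levels]  # copy so the input stays unchanged
    for depth in range(len(refined) - 1, 0, -1):
        parents, children = refined[depth], refined[depth - 1]
        for i in range(len(children)):
            parent = parents[i // group_size]
            children[i] = (1 - mix) * children[i] + mix * parent
    return refined


levels = bottom_up([float(i) for i in range(16)])
print([len(level) for level in levels])  # [16, 8, 4, 2, 1]
refined = top_down(levels)
print(levels[3], refined[3])             # [3.5, 11.5] [5.5, 9.5]
```

After the top-down pass, each lower-level value has been pulled toward its ancestors' summaries, which is the intuition behind letting abstract context influence concrete representations.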

Evaluation Methods

The system is evaluated on four types of task:

- Theory of Mind reasoning tasks (Sally-Anne test variants)

- Analogical reasoning problems

- Contextual inference challenges

- Dynamic information updating scenarios
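For readers unfamiliar with the Sally-Anne test mentioned above, the following sketch encodes the classic scenario as a small belief-tracking program. It illustrates what the task measures (keeping an agent's belief separate from the true world state) and is not the project's evaluation harness; the `Agent` and `Scene` classes are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Agent:
    name: str
    present: bool = True
    beliefs: Dict[str, str] = field(default_factory=dict)


class Scene:
    def __init__(self, agents: List[Agent]) -> None:
        self.agents = {agent.name: agent for agent in agents}
        self.world: Dict[str, str] = {}  # true object locations

    def move(self, obj: str, location: str) -> None:
        """Move an object; only agents who are present see it and update beliefs."""
        self.world[obj] = location
        for agent in self.agents.values():
            if agent.present:
                agent.beliefs[obj] = location

    def leave(self, name: str) -> None:
        self.agents[name].present = False

    def enter(self, name: str) -> None:
        self.agents[name].present = True

    def where_will_look(self, name: str, obj: str) -> str:
        """Predict the search location from the agent's belief, not the world."""
        return self.agents[name].beliefs.get(obj, "unknown")


scene = Scene([Agent("Sally"), Agent("Anne")])
scene.move("marble", "basket")   # Sally puts the marble in the basket
scene.leave("Sally")
scene.move("marble", "box")      # Anne moves it while Sally is away
scene.enter("Sally")
print(scene.where_will_look("Sally", "marble"))  # basket (false belief)
print(scene.world["marble"])                     # box (true location)
```

A system passes the task when it answers "basket" for Sally even though it knows the marble is in the box.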

Reported results:

- Accuracy: 89% on standard cognitive inference tasks

- Interpretability score: 82% human-judge agreement

- Adaptation speed: 95% accuracy after single-example learning

- Computational efficiency: 0.3s average inference time

Reported improvements over baselines:

- 34% improvement over pure LLM approaches

- 28% improvement over traditional cognitive models

- 42% better interpretability than black-box neural systems

Key Innovations

- Novel combination of hierarchical GNNs and LLMs for comprehensive cognitive modeling

- Real-time updating of cognitive models based on new information and experiences

- Maintenance of model interpretability while achieving high performance in cognitive tasks
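As a rough intuition for updating a cognitive model when new information arrives, the sketch below revises a belief distribution with Bayes' rule. This is a swapped-in textbook technique chosen for illustration; the source does not say which update mechanism the project uses, and the hypotheses and likelihood values are made up for the example.

```python
from typing import Dict


def bayes_update(prior: Dict[str, float],
                 likelihood: Dict[str, float]) -> Dict[str, float]:
    """Return the posterior over hypotheses given P(observation | hypothesis)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observation is impossible under every hypothesis")
    return {h: value / total for h, value in unnormalized.items()}


# Belief about where the marble is, before and after one new observation.
prior = {"basket": 0.5, "box": 0.5}
likelihood = {"basket": 0.1, "box": 0.9}  # illustrative values only
posterior = bayes_update(prior, likelihood)
print({h: round(p, 3) for h, p in posterior.items()})  # {'basket': 0.1, 'box': 0.9}
```

Because the posterior is an explicit distribution over named hypotheses, the state of the model after each update remains easy to inspect.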

Applications

Personalized learning systems that model student understanding and adapt teaching strategies.

- Dynamic knowledge model construction

- Misconception identification

- Adaptive explanation generation
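The first two capabilities in this list can be illustrated with a toy diagnostic. The sketch assumes a hand-written catalog that maps specific wrong answers to known misconceptions; the catalog entries, the answer key, and the `diagnose` function are hypothetical, and a real system would presumably infer such patterns rather than look them up.

```python
from typing import Dict, List, Tuple

# Hypothetical misconception catalog: (question, wrong answer) -> diagnosis.
MISCONCEPTIONS: Dict[Tuple[str, str], str] = {
    ("1/2 + 1/3", "2/5"): "adds numerators and denominators separately",
    ("3 - 5", "2"): "subtracts the smaller number from the larger",
}

# Hypothetical answer key for the same questions.
ANSWER_KEY: Dict[str, str] = {"1/2 + 1/3": "5/6", "3 - 5": "-2", "2 * 3": "6"}


def diagnose(responses: Dict[str, str]) -> Tuple[Dict[str, bool], List[str]]:
    """Return per-question correctness and any catalogued misconceptions."""
    correct = {q: answer == ANSWER_KEY[q] for q, answer in responses.items()}
    found = [
        MISCONCEPTIONS[(q, answer)]
        for q, answer in responses.items()
        if (q, answer) in MISCONCEPTIONS
    ]
    return correct, found


knowledge_model, misconceptions = diagnose({"1/2 + 1/3": "2/5", "2 * 3": "6"})
print(knowledge_model)   # {'1/2 + 1/3': False, '2 * 3': True}
print(misconceptions)    # ['adds numerators and denominators separately']
```

The returned diagnosis labels are the kind of signal an adaptive explanation step could condition on.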

Computational framework for testing theories of cognition and reasoning.

- Theory of Mind modeling

- Mental model simulation

- Cognitive development tracking

AI systems that maintain accurate user mental models for improved interaction.

- Intention inferencing

- Contextual understanding

- Adaptable interfaces

Future Directions

The Mental Inferencing Machine project continues to evolve along several research paths:

- Integration of multimodal inputs (vision, speech, text)

- Expansion to collaborative multi-agent systems

- Development of domain-specific cognitive models

- Exploration of emergent reasoning capabilities

- Implementation of neurobiologically inspired mechanisms

Research Impact

This research contributes to cognitive modeling by providing a framework that combines the strengths of symbolic and connectionist AI approaches. The Mental Inferencing Machine has applications in education, psychology, and human-computer interaction, offering new ways to understand and model human cognition.