Leveraging Hierarchical Graph Attention Networks for Mental Representation Inferencing

Advanced Neural Architectures for Cognitive Modeling

Abstract

This research introduces a novel approach to modeling human mental representations using hierarchical graph attention networks (HGANs). Traditional models often fail to capture the dynamic, multi-level nature of cognitive representations. Our architecture addresses this limitation by modeling the hierarchical organization of knowledge and supporting interpretable inference generation. We demonstrate the effectiveness of our approach in predicting human comprehension patterns and mental model construction.

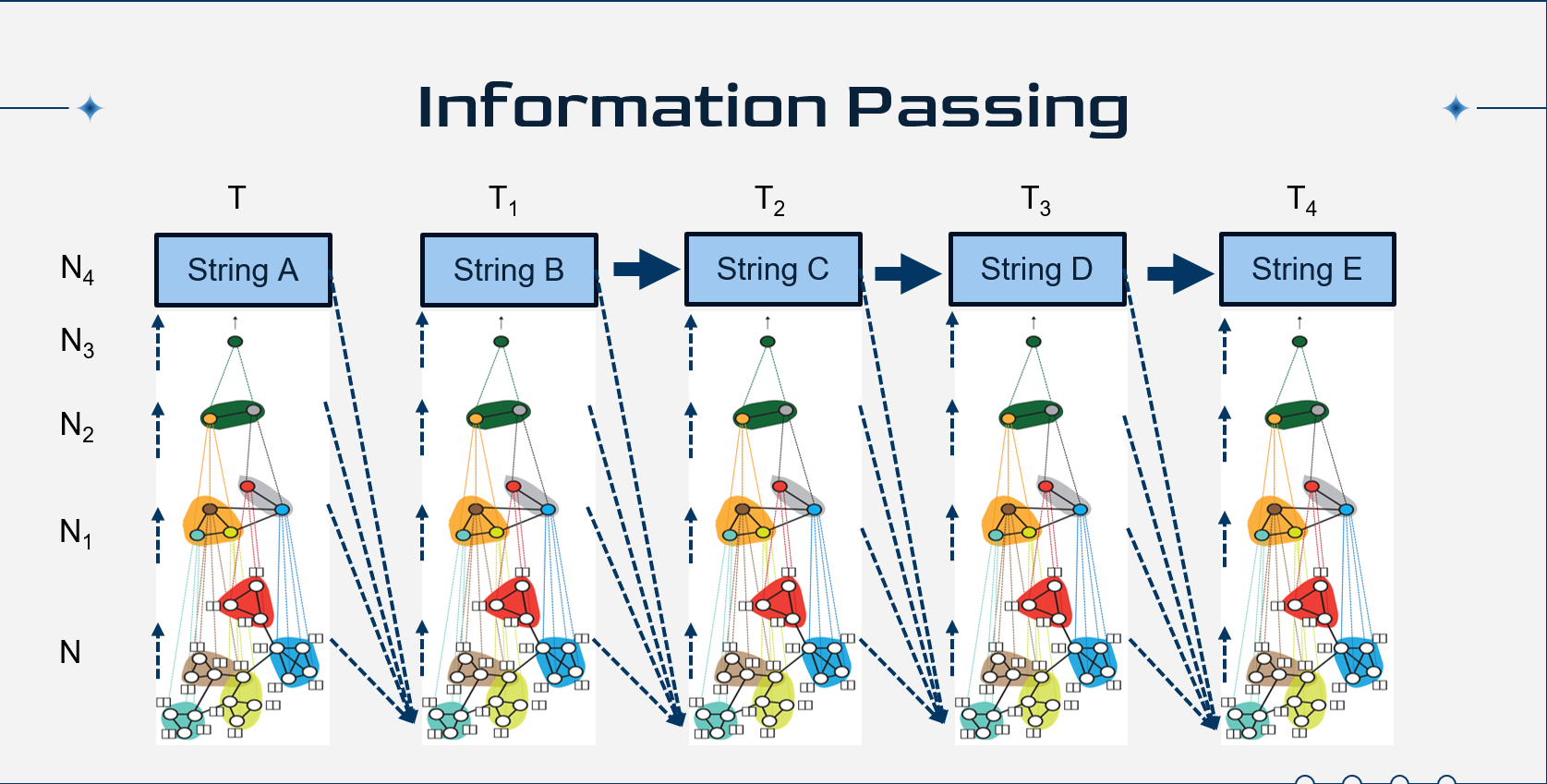

Figure 1: Information flow in our Hierarchical Graph Attention Network model

Key Innovations

- Multi-level Knowledge Representation - Models both low-level linguistic features and high-level semantic structures

- Dynamic Attention Mechanisms - Adapts attention weights based on context and task demands

- Interpretable Architecture - Provides transparent insights into the inferencing process

- Cognitive Alignment - Designed to mirror human mental model construction processes

Technical Approach

Our architecture employs a multi-level graph structure where nodes represent different knowledge units (from words to concepts to schemas) and edges represent relationships between these units. The attention mechanism dynamically weights different parts of this graph based on the specific inferencing task. This allows the model to focus on relevant information while maintaining the overall knowledge structure.
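The paragraph above can be made concrete with a small sketch. This is a toy illustration and not the model's actual implementation: the node names, feature vectors, the `CHILDREN` edge map, and the use of task-conditioned dot-product scoring are all assumptions chosen for clarity. It shows one bottom-up pass in which each higher-level node attends over the lower-level nodes it is connected to, with weights that depend on a task query vector.

```python
import math

# Hypothetical toy graph: each node has a hierarchy level and a feature vector.
NODES = {
    "dog":    {"level": "word",    "feat": [1.0, 0.0]},
    "barked": {"level": "word",    "feat": [0.0, 1.0]},
    "ANIMAL": {"level": "concept", "feat": [0.0, 0.0]},
    "ALARM":  {"level": "schema",  "feat": [0.0, 0.0]},
}

# Hypothetical edges: each higher-level node lists the lower-level nodes it aggregates.
CHILDREN = {
    "ANIMAL": ["dog", "barked"],
    "ALARM": ["ANIMAL"],
}


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(parent, task_query, feats):
    """Return (attention weights per child, aggregated feature) for one parent node."""
    kids = CHILDREN[parent]
    # Assumed scoring rule: similarity between the task query and each child's feature.
    weights = softmax([dot(task_query, feats[k]) for k in kids])
    dim = len(task_query)
    agg = [sum(w * feats[k][i] for w, k in zip(weights, kids)) for i in range(dim)]
    return dict(zip(kids, weights)), agg


def propagate(task_query):
    """Bottom-up pass: words -> concepts -> schemas."""
    feats = {name: list(node["feat"]) for name, node in NODES.items()}
    attention = {}
    for parent in ["ANIMAL", "ALARM"]:  # listed in bottom-up order
        attention[parent], feats[parent] = attend(parent, task_query, feats)
    return attention, feats


# A task query that emphasizes the first feature dimension shifts weight toward "dog".
attention, feats = propagate(task_query=[2.0, 0.0])
```

Changing `task_query` changes the attention weights but leaves `NODES` and `CHILDREN` untouched, which mirrors the idea of focusing on task-relevant information while preserving the overall knowledge structure.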

A key advantage of our approach is its interpretability. By examining the attention weights at different levels of the hierarchy, we can understand which knowledge components are most important for different types of inferences. This provides valuable insights not only for AI model development but also for cognitive science research on human mental representations.
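One simple way to examine attention weights level by level is to rank the knowledge components within each level. The sketch below assumes the weights have already been collected into a dictionary keyed by level; the `attention_by_level` values and the `top_components` helper are hypothetical and serve only to illustrate the idea, not to report results.

```python
# Hypothetical attention weights, grouped by hierarchy level (illustrative values only).
attention_by_level = {
    "word":    {"dog": 0.7, "barked": 0.2, "the": 0.1},
    "concept": {"ANIMAL": 0.6, "SOUND": 0.4},
    "schema":  {"ALARM": 1.0},
}


def top_components(attention_by_level, k=2):
    """For each level, return the k components with the highest attention weight."""
    report = {}
    for level, weights in attention_by_level.items():
        ranked = sorted(weights.items(), key=lambda item: item[1], reverse=True)
        report[level] = ranked[:k]
    return report


report = top_components(attention_by_level)
for level, ranked in report.items():
    print(level, ranked)
```

Comparing such rankings across different inference types is one way to see which words, concepts, or schemas a given kind of inference relies on most.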

Experimental Results

Experiments on reading comprehension tasks show that our HGAN model predicts human inference patterns more accurately than traditional approaches. Cognitive science experts also rated the model's explanations for its inferences as more human-like. These results suggest that hierarchical graph attention networks are a promising framework for modeling the complex, multi-level nature of human mental representations.