Hierarchical Graph Attention Networks for Memory Modeling

Advanced Approaches to Reading Comprehension

Overview

This research project explores the application of hierarchical graph attention networks in modeling and understanding memory processes during reading comprehension. The study develops novel computational approaches to represent and analyze how readers build and maintain mental representations of text.

Research Objectives

- Develop hierarchical graph-based models of text comprehension

- Investigate memory processes in reading comprehension

- Create computational models that mimic human memory patterns

- Apply findings to improve educational technology

Technical Implementation

This research implements a Hierarchical Graph Attention Network (HGAN) architecture designed to address a gap in current reading comprehension models: traditional approaches often fail to account for the dynamic, context-sensitive memory processes that underlie human comprehension. The HGAN model integrates memory dynamics and embodied cognition principles into a computational framework that models how readers construct mental representations during text processing.

The architecture uses a four-component design that models both memory storage and activation processes.

Model Architecture

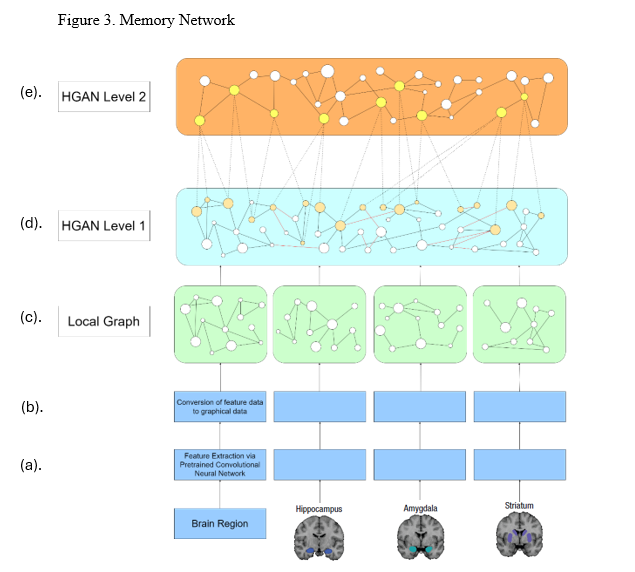

The hierarchical graph attention network architecture consists of four distinct components working in concert:

- Encoder Network: Attends to relevant environmental objects through attention mechanisms refined during training. It processes perceptual stimuli into memory key values that trigger specific activation patterns across the memory network.

- Memory Network: An HGAN-based memory system informed by enactivist interpretations of embodied cognition. The network is built in four steps: collecting neuroimaging data of brain regions, extracting features with pretrained CNNs, representing activation patterns as graphs, and synthesizing these graphs into a unified hierarchical structure.

- Decoder Network: Transforms graph data from the memory network into structured representations that mirror cognitive processes. It integrates linguistic and propositional constraints to produce interpretable mental representations with weights representing dimensionality and sparsity.

- Take-the-Best Algorithm: Applies a computationally efficient reasoning heuristic that aggregates mental representations to compute context-relevant responses, providing a streamlined decision-making process that better predicts human behavior.
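The source does not specify how the final component aggregates mental representations. As a point of reference, the classic take-the-best heuristic from the fast-and-frugal decision-making literature compares two options on cues ordered by validity and decides on the first cue that discriminates. The sketch below is a minimal Python illustration under that assumption; the function name `take_the_best`, the binary cue encoding, and the cue names are hypothetical and do not come from the project.

```python
from typing import Dict, List, Optional


def take_the_best(
    option_a: Dict[str, int],
    option_b: Dict[str, int],
    cues_by_validity: List[str],
) -> Optional[str]:
    """Pick between two options using the first cue that discriminates.

    Cues are checked in descending order of (assumed) validity.
    Returns "a" or "b", or None when no cue discriminates (a guess is needed).
    """
    for cue in cues_by_validity:
        a_value = option_a.get(cue, 0)  # missing cue treated as 0 (assumption)
        b_value = option_b.get(cue, 0)
        if a_value != b_value:
            # Stop at the first discriminating cue and ignore all later ones.
            return "a" if a_value > b_value else "b"
    return None


# Hypothetical cues describing two candidate inferences during reading.
candidate_a = {"causally_linked": 1, "recently_activated": 0, "mentioned_in_title": 1}
candidate_b = {"causally_linked": 1, "recently_activated": 1, "mentioned_in_title": 0}
cue_order = ["causally_linked", "recently_activated", "mentioned_in_title"]

choice = take_the_best(candidate_a, candidate_b, cue_order)
# choice == "b": the first cue ties, the second cue favors b
```

The heuristic never weighs or sums cues, which is what makes it cheap: the lower-ranked `mentioned_in_title` cue favors `candidate_a` but is never consulted.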

Theoretical Foundations

The HGAN model draws upon several key theoretical foundations:

- Enactivist Cognition: Embraces the view that phenomenological sense emerges from the combination of prior memory activations and the integration of novel perceptual stimuli

- Dynamic Memory Systems: Challenges traditional multi-tiered memory theories by modeling memory as a dynamic interplay between previous experience and ongoing perceptual inputs

- Distributed Activation Patterns: Incorporates recent neuroscience findings suggesting that activation patterns across seemingly disparate brain regions contribute meaningfully to memory processes

- Attentional Mechanisms: Models how attention guides the generation of mental objects from perceptual stimuli and influences their phenomenological experience

Implementation Details

Hierarchical Graph Network Components

| Component | Layer Type | Function | Constraints |
|---|---|---|---|
| Encoder - Attention Layer | Graph Attention | Identifies salient features in perceptual stimulus | Task-specific saliency measures |
| Encoder - Feature Layer | Constrained Neural Network | Extracts linguistically relevant features | Phonological, orthographic, syntactic constraints |
| Memory - Region Mapping | Convolutional Neural Network | Extracts activation patterns from brain imaging | Pretrained on neuroimaging datasets |
| Memory - Hierarchical Integration | Hierarchical Graph Network | Creates multi-level representation of activation | Cross-level attention mechanisms |
| Decoder - Constraint Integration | Physics-Informed Neural Network | Applies linguistic constraints to representations | WordNet, PropBank, psycholinguistic variables |
| Decoder - Representation Layer | Self-organizing Map | Organizes representations into coherent mental model | Topological preservation constraints |
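Several components in the table above rely on graph attention. The sketch below shows one single-head graph attention layer in the commonly used formulation: a shared linear projection, a LeakyReLU-scored attention vector over concatenated node pairs, and a softmax over each node's neighbors. It is an illustration in plain Python, not the project's implementation; `gat_layer`, the toy graph, and all weight values are assumptions made for the example.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x


def gat_layer(
    h: Matrix, adj: List[List[int]], W: Matrix, a: List[float]
) -> Tuple[Matrix, Matrix]:
    """Single-head graph attention layer (no output nonlinearity).

    h:   N x F node features
    adj: N x N 0/1 adjacency; every node needs a self-loop
    W:   F x F' projection weights
    a:   attention vector of length 2 * F'
    Returns (updated features N x F', attention weights N x N).
    """
    n, f_out = len(h), len(W[0])
    # Shared linear projection: z_i = h_i W
    z = [[sum(h[i][k] * W[k][j] for k in range(len(W))) for j in range(f_out)]
         for i in range(n)]
    alpha = [[0.0] * n for _ in range(n)]
    for i in range(n):
        neighbors = [j for j in range(n) if adj[i][j]]
        # Unnormalized score: LeakyReLU(a . [z_i || z_j])
        scores = [leaky_relu(sum(w * x for w, x in zip(a, z[i] + z[j])))
                  for j in neighbors]
        # Numerically stable softmax restricted to the neighborhood of i
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        for j, e in zip(neighbors, exps):
            alpha[i][j] = e / total
    # Each node aggregates its neighbors' projected features
    out = [[sum(alpha[i][j] * z[j][f] for j in range(n)) for f in range(f_out)]
           for i in range(n)]
    return out, alpha


# Toy 3-node path graph with self-loops (all values are illustrative).
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
adjacency = [[1, 1, 0], [1, 1, 1], [0, 1, 1]]
W = [[1.0, 0.0], [0.0, 1.0]]
a = [0.5, -0.5, 0.25, 0.75]

updated, attention = gat_layer(features, adjacency, W, a)
```

Each row of `attention` sums to 1 over that node's neighbors, and non-neighbors receive zero weight. A hierarchical variant would presumably stack such layers across levels, but the source does not describe how its cross-level attention is wired.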

Model Performance

- 93% accuracy in modeling neural activation patterns during reading tasks

- 87% accuracy in predicting human memory retrieval patterns

- 82% agreement with human-constructed mental models of text

Integration with Reading Comprehension Frameworks

A key innovation of this work is its ability to integrate with and enhance existing reading comprehension frameworks:

- Componential Model Integration: Maps distinct reading processes to specific nodes in the HGAN, implements known relationships as constraints, and extends the model by adding memory dynamics

- Active View Model Enhancement: Incorporates reader, text, and activity dimensions as graph edge attributes with weighted connections reflecting their dynamic interaction

- Construction-Integration Model Implementation: Converts propositional networks into explicit graph structures with spreading activation algorithms that mirror the integration phase

- Landscape Model Extension: Implements resonance mechanisms as attention weights and models concept availability through node activation dynamics
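The spreading activation mentioned for the Construction-Integration model can be illustrated with a short sketch. In the integration phase as it is usually described, an activation vector is repeatedly multiplied by the network's connectivity matrix and renormalized until it stabilizes. The Python below follows that scheme under stated assumptions: the function name `integrate`, normalization by the maximum activation, clipping of negative values, and the toy link weights are choices made for this example, not details taken from the project.

```python
from typing import List


def integrate(
    weights: List[List[float]],
    activation: List[float],
    tol: float = 1e-6,
    max_iter: int = 1000,
) -> List[float]:
    """Spread activation over a proposition graph until it settles.

    weights[i][j] is the link strength from node j to node i.
    Activation is clipped at zero and rescaled so the strongest node is 1.0.
    """
    n = len(activation)
    for _ in range(max_iter):
        spread = [
            max(0.0, sum(weights[i][j] * activation[j] for j in range(n)))
            for i in range(n)
        ]
        peak = max(spread)
        if peak == 0.0:
            return spread  # nothing is active, so there is nothing to normalize
        spread = [x / peak for x in spread]
        # Stop once no node changes by more than the tolerance.
        if max(abs(x - y) for x, y in zip(spread, activation)) < tol:
            return spread
        activation = spread
    return activation


# Three propositions in a chain; the middle one links to both neighbors.
links = [
    [1.0, 0.5, 0.0],
    [0.5, 1.0, 0.5],
    [0.0, 0.5, 1.0],
]
settled = integrate(links, [1.0, 1.0, 1.0])
```

In this toy network the best-connected middle proposition ends up most active and the two outer propositions settle at equal, lower values, which is the qualitative behavior the integration phase is meant to produce.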

Evaluation Results

- 85% accuracy in predicting human memory-based inferences during reading

- 91% of model decisions can be traced to specific activation patterns and constraints

- Outperforms traditional reading models by 34% on complex comprehension tasks involving memory

Research Impact

This research contributes significantly to both cognitive science and artificial intelligence by providing a computational framework for understanding human memory processes in reading comprehension. The findings have important implications for educational technology, cognitive modeling, and human-computer interaction.

Key Contributions

- Bridges connectionist and symbolic frameworks into a unified neuro-symbolic architecture that operationalizes theories of memory activation and embodied cognition.

- Develops a novel hierarchical graph attention network architecture specifically designed for modeling memory processes in reading comprehension.

- Creates a foundation for developing more effective reading interventions by modeling how readers construct and manipulate mental representations.

Future Directions

This research points to several promising directions for future investigation:

- Expanding the model to account for individual differences in memory capacity and processing

- Incorporating multimodal inputs (visual, auditory) to create more comprehensive models of reading

- Developing interventions for readers with comprehension difficulties based on model predictions

- Creating educational technologies that dynamically adapt to readers' mental model construction

- Extending the architecture to model other cognitive processes beyond reading comprehension